
Log MCP Agentic Analysis

by @shahraj91


Created 2/21/2026


Log MCP -- Agentic Log Analysis System

A local Model Context Protocol (MCP) server and client for analyzing large log files with a hybrid architecture:

- Deterministic signal extraction (parsing, aggregation, clustering)

- Tool abstraction via MCP

- Optional agentic reasoning via OpenAI Agents SDK

- Structured output + visual graph generation

This project simulates how modern production log triage systems are built.

Why This Project Exists

Most AI demos throw raw logs into an LLM.

This system does the opposite:

- Extract structured signals deterministically

- Expose capabilities as MCP tools

- Allow optional agentic reasoning on top

This separation mirrors production-grade observability and AI-assisted triage systems.

Features

- Log level aggregation (INFO / WARN / ERROR / FATAL)

- Time-bucketed event histogram

- Error clustering via normalized string similarity

- Graph generation (PNG)

- Local-only mode (no API key required)

- Optional agentic integration (LLM-powered triage summaries)
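The level aggregation and time-bucketed histogram listed above can be sketched with the standard library alone. This is a minimal illustration, not the project's code: it assumes a hypothetical line layout of `YYYY-MM-DD HH:MM:SS LEVEL message`, and the helper names `parse_line`, `level_counts`, and `time_buckets` are made up for this example (the project lists Pandas in its stack and may aggregate differently).

```python
import re
from collections import Counter
from datetime import datetime

# Assumed (hypothetical) line layout:
#   2026-02-21 10:15:03 ERROR Database connection timed out after 30s
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})\s+"
    r"(?P<level>INFO|WARN|ERROR|FATAL)\s+(?P<msg>.*)$"
)
LEVELS = ("INFO", "WARN", "ERROR", "FATAL")


def parse_line(line):
    """Return (timestamp, level, message), or None for lines that don't match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    ts = datetime.strptime(m["ts"], "%Y-%m-%d %H:%M:%S")
    return ts, m["level"], m["msg"]


def level_counts(lines):
    """Map each level to (count, percent of parsed lines rounded to 0.1)."""
    counts = Counter()
    for line in lines:
        parsed = parse_line(line)
        if parsed is not None:
            counts[parsed[1]] += 1
    total = sum(counts.values())
    return {
        lvl: (counts[lvl], round(100 * counts[lvl] / total, 1) if total else 0.0)
        for lvl in LEVELS
    }


def time_buckets(lines, minutes=5):
    """Count parsed events per fixed-width bucket, keyed by bucket start time."""
    hist = Counter()
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue
        ts = parsed[0]
        # Floor to the start of the bucket; assumes `minutes` divides 60 evenly.
        start = ts.replace(minute=ts.minute - ts.minute % minutes, second=0)
        hist[start] += 1
    return {b.strftime("%Y-%m-%d %H:%M"): n for b, n in sorted(hist.items())}


sample = [
    "2026-02-21 10:01:12 INFO Service started",
    "2026-02-21 10:03:40 ERROR Database connection timed out after 30s",
    "2026-02-21 10:07:05 WARN Slow response from /api/orders",
    "2026-02-21 10:08:59 ERROR Database connection timed out after 45s",
    "not a log line",
]
print(level_counts(sample))
# {'INFO': (1, 25.0), 'WARN': (1, 25.0), 'ERROR': (2, 50.0), 'FATAL': (0, 0.0)}
print(time_buckets(sample))
# {'2026-02-21 10:00': 2, '2026-02-21 10:05': 2}
```

Unparseable lines are skipped rather than counted, so percentages are relative to recognized log entries only.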

Architecture

```text
local_triage.py (client)
        ↓
MCP HTTP transport
        ↓
server.py (tool layer)
        ↓
Log analysis + graph generation
```

MCP Tools Exposed

- `analyze_levels`

- `cluster_errors`

- `make_graph`

The client calls these tools and formats the output.
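A tool layer like this one could be sketched with `FastMCP` from the official MCP Python SDK (the `mcp` package). This is an illustrative sketch, not the project's `server.py`: only `analyze_levels` is shown, the server name `"log-mcp"` and the helper `analyze_levels_impl` are hypothetical, and a `date time LEVEL message` line layout is assumed. The SDK import is guarded so the analysis function can still be used where the SDK isn't installed.

```python
from collections import Counter

try:
    # Official MCP Python SDK; optional here so the pure function stays testable.
    from mcp.server.fastmcp import FastMCP
except ImportError:
    FastMCP = None

LEVELS = ("INFO", "WARN", "ERROR", "FATAL")


def analyze_levels_impl(path: str) -> dict[str, int]:
    """Count lines per level, assuming a 'date time LEVEL message' layout."""
    counts = Counter()
    with open(path, encoding="utf-8", errors="replace") as fh:
        for line in fh:
            parts = line.split(maxsplit=3)
            # parts[2] holds the level token under the assumed layout.
            if len(parts) >= 3 and parts[2] in LEVELS:
                counts[parts[2]] += 1
    return {lvl: counts[lvl] for lvl in LEVELS}


if FastMCP is not None:
    mcp = FastMCP("log-mcp")  # hypothetical server name

    @mcp.tool()
    def analyze_levels(path: str) -> dict[str, int]:
        """Return per-level line counts for the log file at `path`."""
        return analyze_levels_impl(path)
```

In recent SDK releases, calling `mcp.run(transport="streamable-http")` serves the registered tools over HTTP; verify the transport name against the installed version. `cluster_errors` and `make_graph` would be registered with the same decorator. Keeping the analysis in a plain function leaves the deterministic logic testable independently of the transport.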

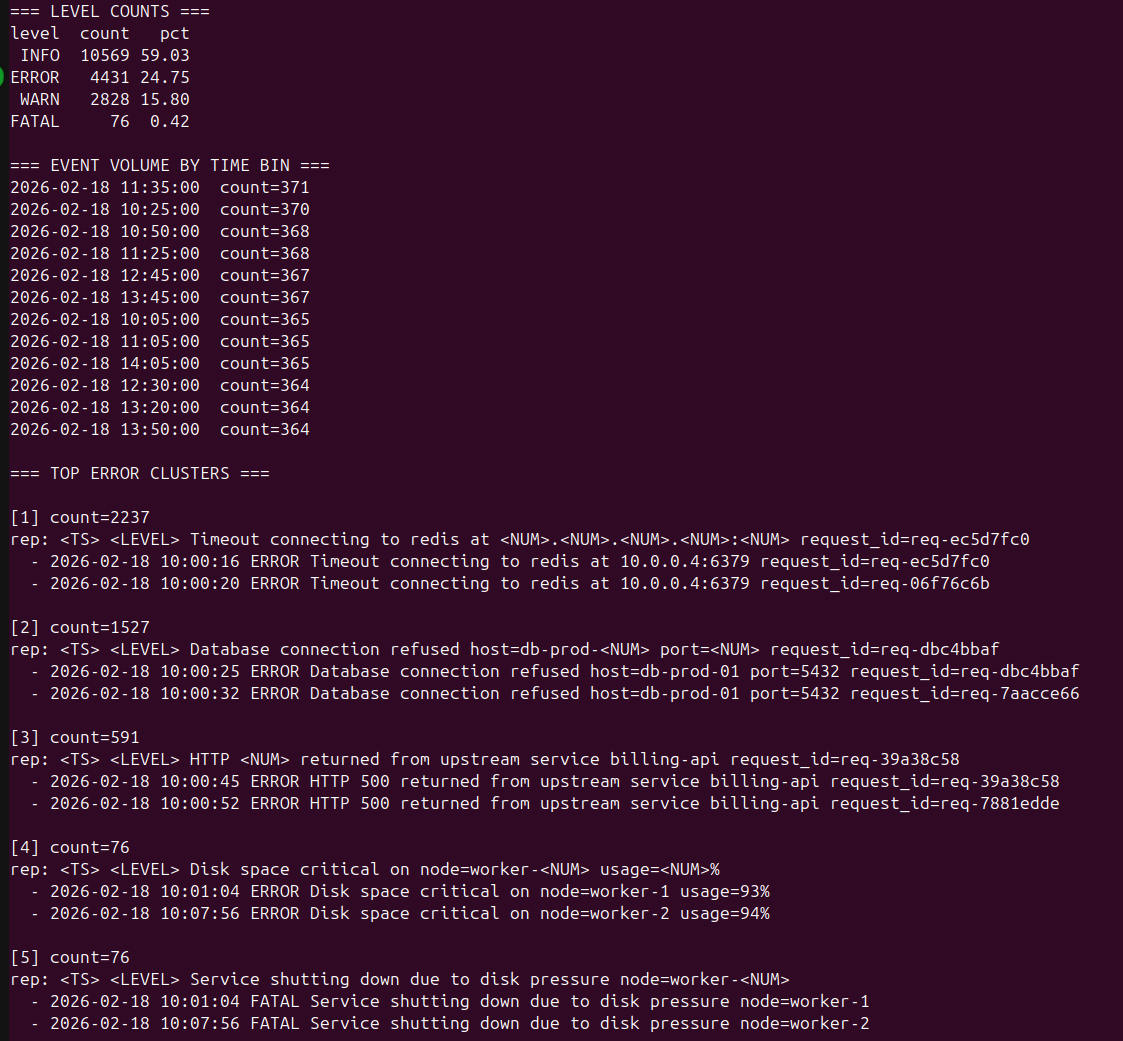

Example Output (15k+ Line Log)

Terminal Analysis Output

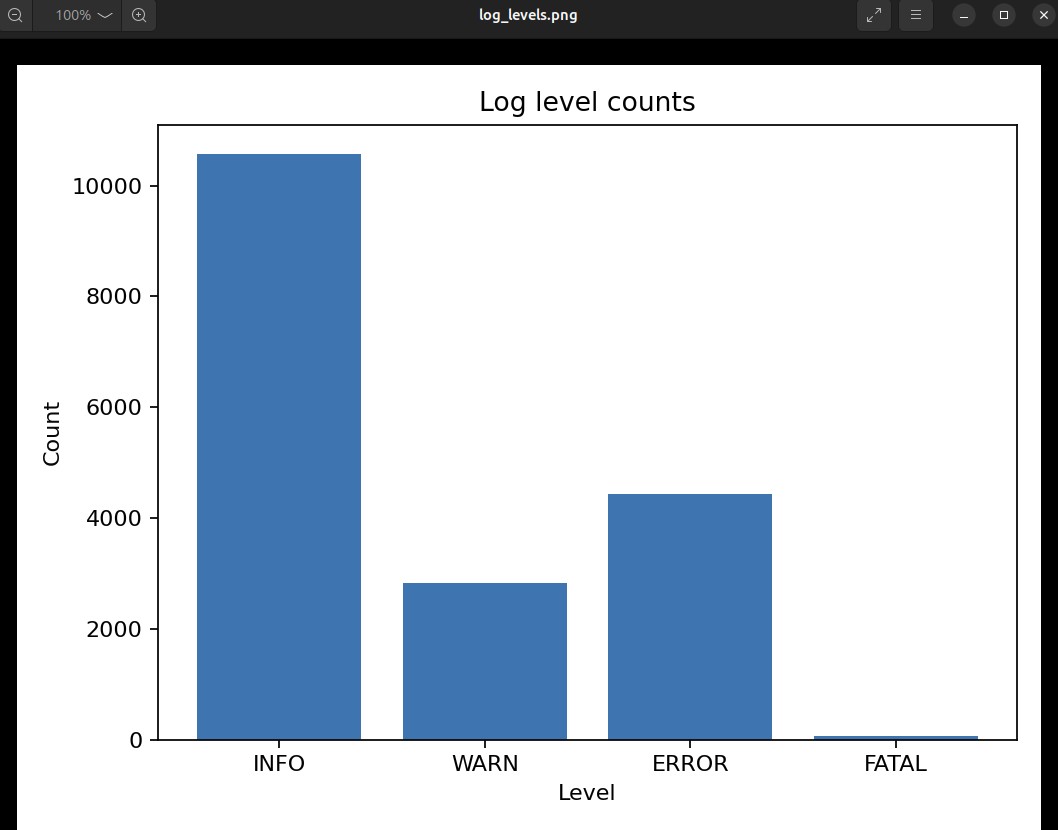

Log Level Distribution Graph

Setup

```bash
python3 -m venv .venv
source .venv/bin/activate
pip install -r requirements.txt
```

Generate Large Synthetic Log

```bash
python3 generate_large_log.py
```

This produces a 15,000+ line realistic production-style log.
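A generator in the spirit of `generate_large_log.py` might look like the following. It is a hypothetical sketch, not the project's script: the message templates, level weights, and the `date time LEVEL message` layout are assumptions, and a fixed seed keeps the output reproducible between runs.

```python
import random
from datetime import datetime, timedelta

# Hypothetical templates and level weights (roughly 80% INFO, 1% FATAL).
TEMPLATES = {
    "INFO": ["Request {id} served in {ms}ms", "User {id} logged in"],
    "WARN": ["Slow query on table orders took {ms}ms", "Retrying request {id}"],
    "ERROR": ["Database connection timed out after {ms}ms",
              "Payment {id} failed: upstream 502"],
    "FATAL": ["Worker {id} crashed: out of memory"],
}
WEIGHTS = {"INFO": 80, "WARN": 12, "ERROR": 7, "FATAL": 1}


def generate_lines(n, start=datetime(2026, 2, 21), seed=42):
    """Yield n lines in the assumed 'date time LEVEL message' layout."""
    rng = random.Random(seed)  # local RNG so runs are reproducible
    levels = list(WEIGHTS)
    weights = [WEIGHTS[lvl] for lvl in levels]
    ts = start
    for _ in range(n):
        ts += timedelta(seconds=rng.randint(1, 5))  # strictly increasing time
        level = rng.choices(levels, weights=weights)[0]
        msg = rng.choice(TEMPLATES[level]).format(
            id=rng.randint(1000, 9999), ms=rng.randint(5, 5000)
        )
        yield f"{ts:%Y-%m-%d %H:%M:%S} {level} {msg}"


def write_log(path, n=15_000):
    """Write n generated lines to `path`."""
    with open(path, "w", encoding="utf-8") as fh:
        for line in generate_lines(n):
            fh.write(line + "\n")
```

Calling `write_log("large_sample.log")` would produce a file that the `local_triage.py --log large_sample.log` command can read, provided the analysis side expects the same layout.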

Run MCP Server

```bash
python3 server.py
```

Run Local Triage (No OpenAI Required)

In a separate terminal:

```bash
python3 local_triage.py --log large_sample.log
```

Outputs:

- Log level table (counts + %)

- Top error clusters

- Time-bucket summary

- Graph saved as `log_levels.png`
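The "top error clusters" output relies on normalized string similarity, which can be approximated with `difflib.SequenceMatcher` from the standard library. This greedy sketch is an assumption about the approach, not the project's implementation: the masking rules in `normalize` and the `0.85` threshold are illustrative choices.

```python
import re
from difflib import SequenceMatcher


def normalize(msg: str) -> str:
    """Lowercase and mask variable tokens (hex IDs, numbers) so that
    messages differing only in those tokens become identical."""
    msg = msg.lower()
    msg = re.sub(r"0x[0-9a-f]+", "<hex>", msg)
    msg = re.sub(r"\d+", "<num>", msg)
    return re.sub(r"\s+", " ", msg).strip()


def cluster_errors(messages, threshold=0.85):
    """Greedy single pass: each message joins the first cluster whose
    representative has a SequenceMatcher ratio >= threshold, otherwise it
    starts a new cluster. Returns (representative, count), largest first."""
    clusters = []  # each entry: [normalized representative, count]
    for msg in messages:
        norm = normalize(msg)
        for cluster in clusters:
            if SequenceMatcher(None, norm, cluster[0]).ratio() >= threshold:
                cluster[1] += 1
                break
        else:
            clusters.append([norm, 1])
    return sorted(((rep, n) for rep, n in clusters), key=lambda c: c[1], reverse=True)


errors = [
    "Database connection timed out after 30s",
    "Payment 8812 failed: upstream 502",
    "Database connection timed out after 4500s",
    "Payment 1234 failed: upstream 503",
    "Database connection timed out after 12s",
    "Disk /dev/sda1 full",
]
for rep, n in cluster_errors(errors):
    print(f"{n:>3}  {rep}")
```

Masking numbers and IDs first turns most repeats into exact matches, so the similarity ratio only has to absorb small residual differences. Character-level similarity cannot group messages that are worded differently but mean the same thing; that is what embedding-based semantic clustering, listed under Future Improvements, would address.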

Optional: Agentic Mode (Requires OpenAI API Key)

```bash
export OPENAI_API_KEY="sk-..."
python3 agent_client.py
```

This allows the agent to:

- Call MCP tools

- Summarize root causes

- Suggest debugging steps

- Classify severity

Tech Stack

- Python 3.12

- MCP (Model Context Protocol)

- Pandas

- Matplotlib

- OpenAI Agents SDK (optional)

Project Structure

```text
log-mcp/
│
├── server.py
├── local_triage.py
├── agent_client.py
├── generate_large_log.py
├── sample.log
├── requirements.txt
├── docs/
│   ├── terminal_output.png
│   └── log_levels.png
└── README.md
```

Design Philosophy

- Deterministic first

- LLM second

- Clear separation of capabilities and reasoning

- Production-inspired architecture

- Reproducible and inspectable outputs

Future Improvements

- Streamlit dashboard UI

- Docker containerization

- GitHub Actions CI

- Structured test coverage

- Semantic clustering via embeddings

- Real-time streaming log ingestion

License

MIT

Quick Setup


Install Package (if required)

```bash
uvx log-mcp-agentic-analysis
```

Cursor configuration (mcp.json)

```json
{
  "mcpServers": {
    "shahraj91-log-mcp-agentic-analysis": {
      "command": "uvx",
      "args": [
        "log-mcp-agentic-analysis"
      ]
    }
  }
}
```