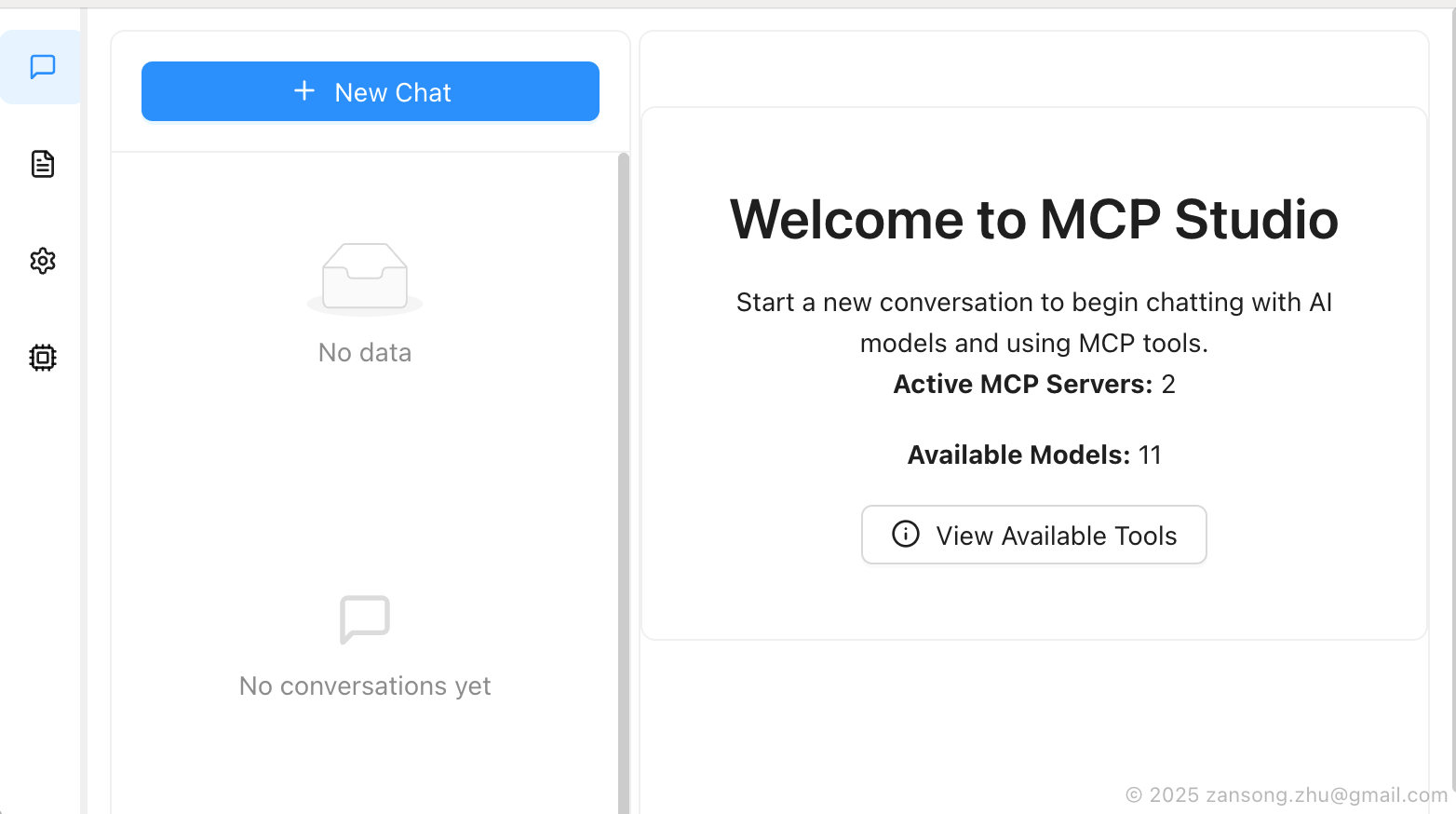

A desktop application built with Electron and React that provides a unified interface for interacting with AI models and Model Context Protocol (MCP) servers.

MCP Studio

A minimal desktop application for working with Model Context Protocol (MCP) servers and AI assistants.

Features

- 🤖 Multi-Provider AI Support: Chat with AI models from Anthropic Claude, OpenAI, Google Gemini, DeepSeek, Qwen and Ollama.

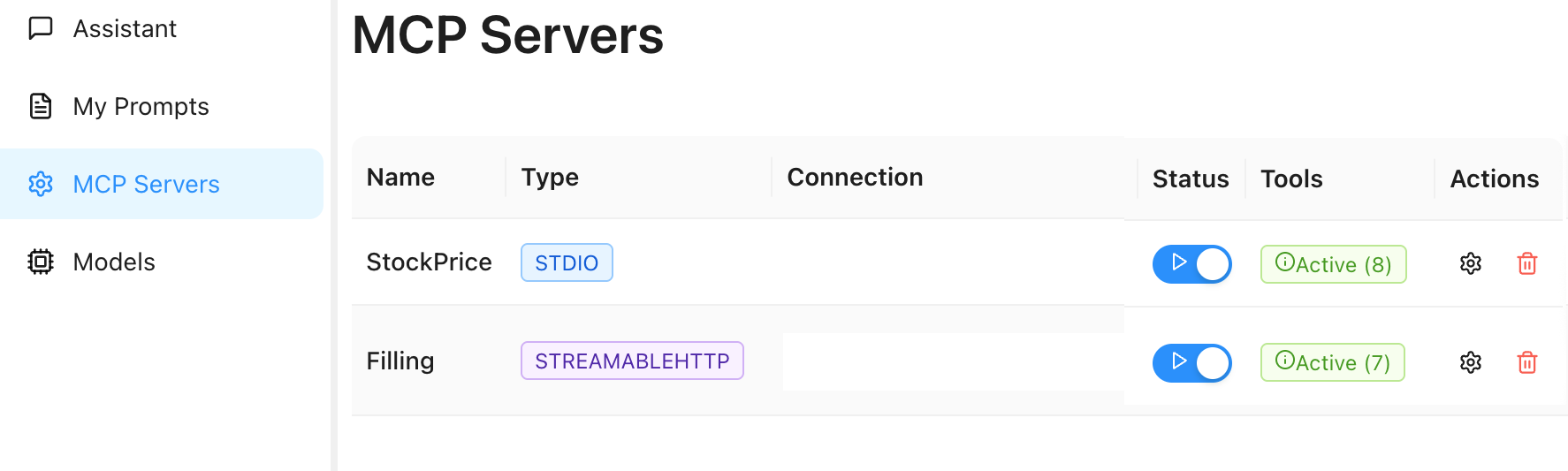

- 🔧 MCP Server Management: Connect to stdio, SSE, and HTTP-based MCP servers with health monitoring

- 📝 Prompt Templates: Create and manage reusable prompt templates with quick-access shortcuts

- 🛠️ Interactive Tool Execution: Real-time MCP tool execution with abort capability and error handling

- 💾 Conversation Persistence: Maintain chat history and conversation data across sessions

- 🔐 Secure Credential Management: Safe storage of API keys and sensitive configuration

- 🖥️ Cross-Platform Desktop: Native builds for Windows, macOS, and Linux

Getting Started

Prerequisites

- Node.js 22.x or higher

- npm or yarn

Installation

- Clone the repository:

git clone https://github.com/ZansongZhu/mcp-studio.git

cd mcp-studio

- Install dependencies:

npm install

- Start development:

npm run dev

Building

Build for your current platform:

npm run build

Platform-specific builds:

npm run build:win # Windows

npm run build:mac # macOS

npm run build:linux # Linux

Configuration

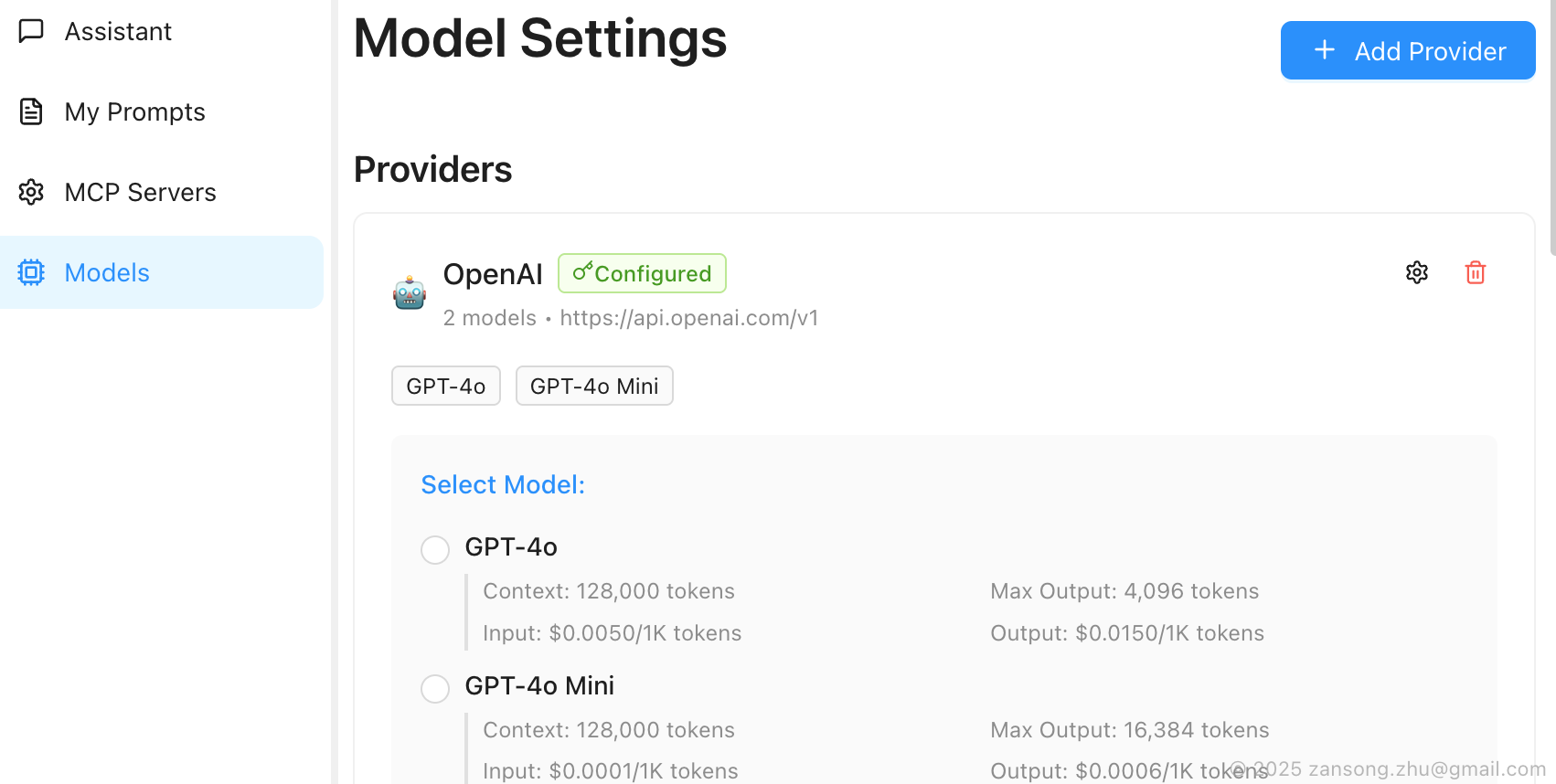

Adding LLM Providers

- Go to the "Models" tab

- Click "Add Provider"

- Enter your provider details:

- Name (e.g., "OpenAI", "Anthropic")

- Base URL (e.g., "https://api.openai.com/v1")

- API Key
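The three fields above map onto a small configuration record. The sketch below is only an illustration of how such an entry might be shaped and checked before saving; `ProviderConfig`, `validateProvider`, and `maskApiKey` are hypothetical names and are not taken from the MCP Studio codebase.

```typescript
// Hypothetical shape for one provider entry; the field names are assumptions.
interface ProviderConfig {
  name: string;    // e.g. "OpenAI", "Anthropic"
  baseUrl: string; // e.g. "https://api.openai.com/v1"
  apiKey: string;
}

// Returns a list of human-readable problems; an empty list means the entry looks usable.
function validateProvider(p: ProviderConfig): string[] {
  const problems: string[] = [];
  if (p.name.trim() === "") problems.push("Name is required");
  if (!/^https?:\/\/\S+$/.test(p.baseUrl.trim())) {
    problems.push("Base URL must start with http:// or https://");
  }
  if (p.apiKey.trim() === "") problems.push("API Key is required");
  return problems;
}

// Masks a key for display so the full secret is never shown in the UI or logs.
function maskApiKey(key: string): string {
  return key.length <= 4 ? "****" : "****" + key.slice(-4);
}
```

Returning a list of problems rather than throwing on the first one lets a form show every issue at once.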

Adding MCP Servers

- Go to the "MCP Servers" tab

- Click "Add Server"

- Configure your server:

- Stdio: For command-line MCP servers

- SSE/HTTP: For web-based MCP servers

Example Configurations

File System Server (Stdio):

- Type: Stdio

- Command: npx

- Arguments: @modelcontextprotocol/server-filesystem

Web Server (SSE):

- Type: SSE

- Base URL: http://localhost:3000/sse
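The two example configurations differ in which fields they need, which a tagged union can express. This is a minimal sketch under assumed names (`McpServerConfig`, `describeServer`, and the `type`, `command`, `args`, `baseUrl` fields are hypothetical); the format MCP Studio actually stores may differ.

```typescript
// Hypothetical config shapes for the two server kinds shown above.
type StdioServer = { type: "stdio"; command: string; args: string[] };
type SseServer = { type: "sse"; baseUrl: string };
type McpServerConfig = StdioServer | SseServer;

// The File System Server example, expressed as data.
const fileSystemServer: McpServerConfig = {
  type: "stdio",
  command: "npx",
  args: ["@modelcontextprotocol/server-filesystem"],
};

// The Web Server example, expressed as data.
const webServer: McpServerConfig = {
  type: "sse",
  baseUrl: "http://localhost:3000/sse",
};

// Summarizes how a server would be reached, narrowing on the `type` tag.
function describeServer(cfg: McpServerConfig): string {
  switch (cfg.type) {
    case "stdio":
      return `spawn: ${[cfg.command, ...cfg.args].join(" ")}`;
    case "sse":
      return `connect: ${cfg.baseUrl}`;
  }
}
```

Because the union is tagged, the compiler rejects a stdio entry without a command or an SSE entry without a URL.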

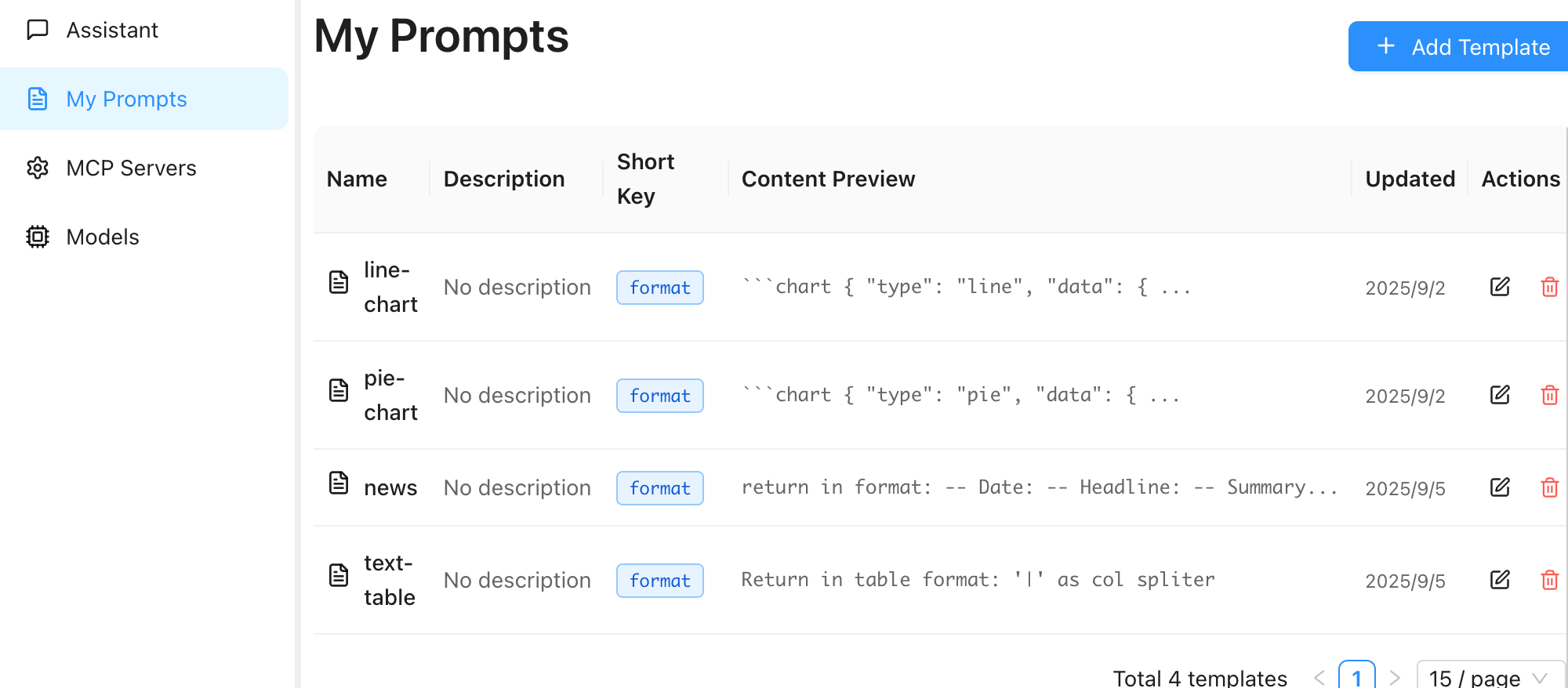

Set Up Your Favorite Prompts

You can save your favorite prompts and assign each one a short key so you can reuse it quickly while chatting with LLMs:

- Go to the "Prompt Templates" tab

- Click "Add Template"

- Configure your prompt:

- Name: Give your prompt a descriptive name

- Short Key: Assign a quick shortcut (e.g., "code", "review", "debug")

- Template: Write your prompt template with variables if needed

During chat, you can quickly access your favorite prompts by typing the short key, making your conversations with LLMs more efficient and consistent.
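To make the short-key idea concrete, here is a sketch of how a message such as "/review ..." could be expanded into its template. The `{{name}}` placeholder syntax, the `input` variable, and the `expandShortKey` helper are assumptions made for illustration; the README does not specify how template variables are written.

```typescript
// Hypothetical template record mirroring the Name / Short Key / Template fields.
interface PromptTemplate {
  name: string;
  shortKey: string;
  template: string;
}

const templates: PromptTemplate[] = [
  {
    name: "Code review",
    shortKey: "review",
    template: "Review this {{language}} code:\n{{input}}",
  },
];

// Expands "/<shortKey> rest of message" into the matching template.
// Returns the message unchanged when it has no slash prefix or no template matches.
function expandShortKey(
  message: string,
  all: PromptTemplate[],
  vars: Record<string, string> = {},
): string {
  const match = /^\/(\S+)\s*([\s\S]*)$/.exec(message);
  if (!match) return message;
  const key = match[1];
  const rest = match[2] ?? "";
  const found = all.find((t) => t.shortKey === key);
  if (!found) return message;
  // The text after the short key is exposed as {{input}}; caller-supplied vars win.
  const values = new Map<string, string>([["input", rest], ...Object.entries(vars)]);
  // Unknown placeholders are left in place so the gap stays visible to the user.
  return found.template.replace(
    /\{\{(\w+)\}\}/g,
    (whole: string, name: string) => values.get(name) ?? whole,
  );
}
```

For example, `expandShortKey("/review const x = 1;", templates, { language: "TypeScript" })` fills both placeholders, while a message with an unknown short key passes through untouched.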

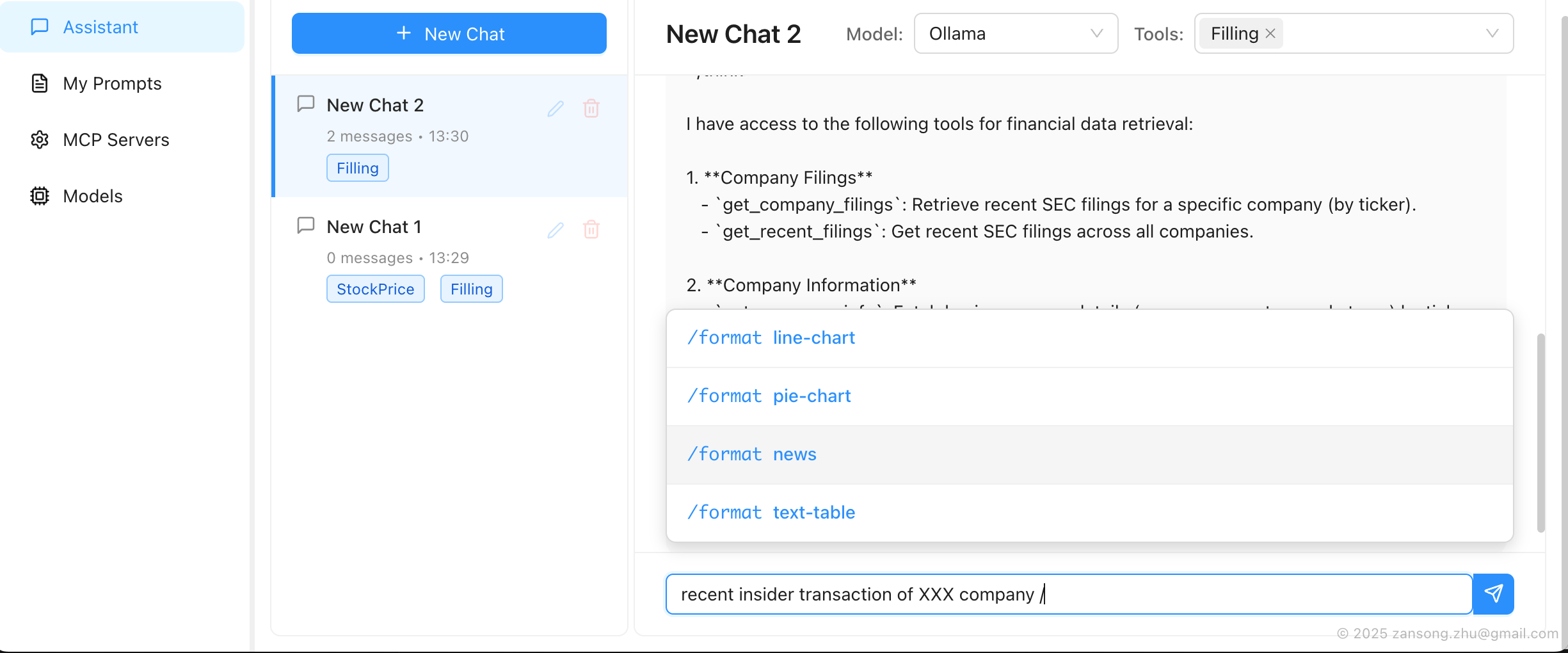

Chat with Model/MCP Tools

Once you have configured your AI providers and MCP servers, you can start interactive conversations that leverage both AI models and MCP tools:

- Go to the "Home" tab to access the chat interface

- Select an AI model from your configured providers, then select the MCP servers you want to use in this conversation

- Start chatting - the AI can automatically use connected MCP tools to:

- Access file systems and read/write files

- Execute commands and scripts

- Query databases and APIs

- Perform web searches and data retrieval

- And much more based on your connected MCP servers

The chat interface provides real-time tool execution feedback and maintains conversation history for seamless interactions.
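One way to picture how the selected servers shape a conversation: only tools from those servers are offered to the model. The sketch below is an assumption about how such a lookup might be built, not a description of MCP Studio's internals; `ConnectedServer`, `buildToolIndex`, `listTools`, and the "serverId.toolName" key format are all hypothetical.

```typescript
// Hypothetical descriptors; real MCP tool metadata carries more fields, such as an input schema.
interface ToolInfo {
  name: string;
  description: string;
}
interface ConnectedServer {
  id: string;
  tools: ToolInfo[];
}
type IndexedTool = ToolInfo & { serverId: string };

// Builds a lookup keyed by "serverId.toolName" so that tools with the same
// name on different servers do not collide. Unselected servers are skipped.
function buildToolIndex(
  servers: ConnectedServer[],
  selectedIds: string[],
): Map<string, IndexedTool> {
  const index = new Map<string, IndexedTool>();
  for (const server of servers) {
    if (!selectedIds.includes(server.id)) continue;
    for (const tool of server.tools) {
      index.set(`${server.id}.${tool.name}`, { ...tool, serverId: server.id });
    }
  }
  return index;
}

// Formats one line per tool, roughly what a tool listing could display.
function listTools(index: Map<string, IndexedTool>): string[] {
  return Array.from(index, ([key, tool]) => `${key}: ${tool.description}`);
}
```

Prefixing each tool with its server id is a design choice for this sketch; it keeps routing unambiguous when a tool call comes back from the model.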

Helpful Tips:

- Type "What tools are available?" or enter /tools to show all tools available in this session

- Use your prompt templates in the message box by typing "/" followed by your template's short key

MCP Resources

Discover more MCP servers and tools to enhance your experience:

- mcp.so - Comprehensive directory of MCP servers and resources

- Awesome MCP Servers - Curated list of community MCP servers

- Official MCP Servers - Official Model Context Protocol server implementations

Architecture

This application is built with:

- Electron: Desktop app framework

- React: UI framework

- TypeScript: Type safety

- Ant Design: UI components

- Redux Toolkit: State management

- MCP SDK: Model Context Protocol integration

Project Structure

src/

├── main/ # Electron main process

│ └── services/ # Backend services (MCP, etc.)

├── renderer/ # React frontend

│ ├── pages/ # Application pages

│ ├── components/ # Reusable components

│ └── store/ # Redux store

├── preload/ # Electron preload scripts

└── shared/ # Shared types and utilities

Contributing

- Fork the repository

- Create your feature branch

- Make your changes

- Test your changes

- Submit a pull request

License

MIT License

Copyright (c) 2025 MCP Studio