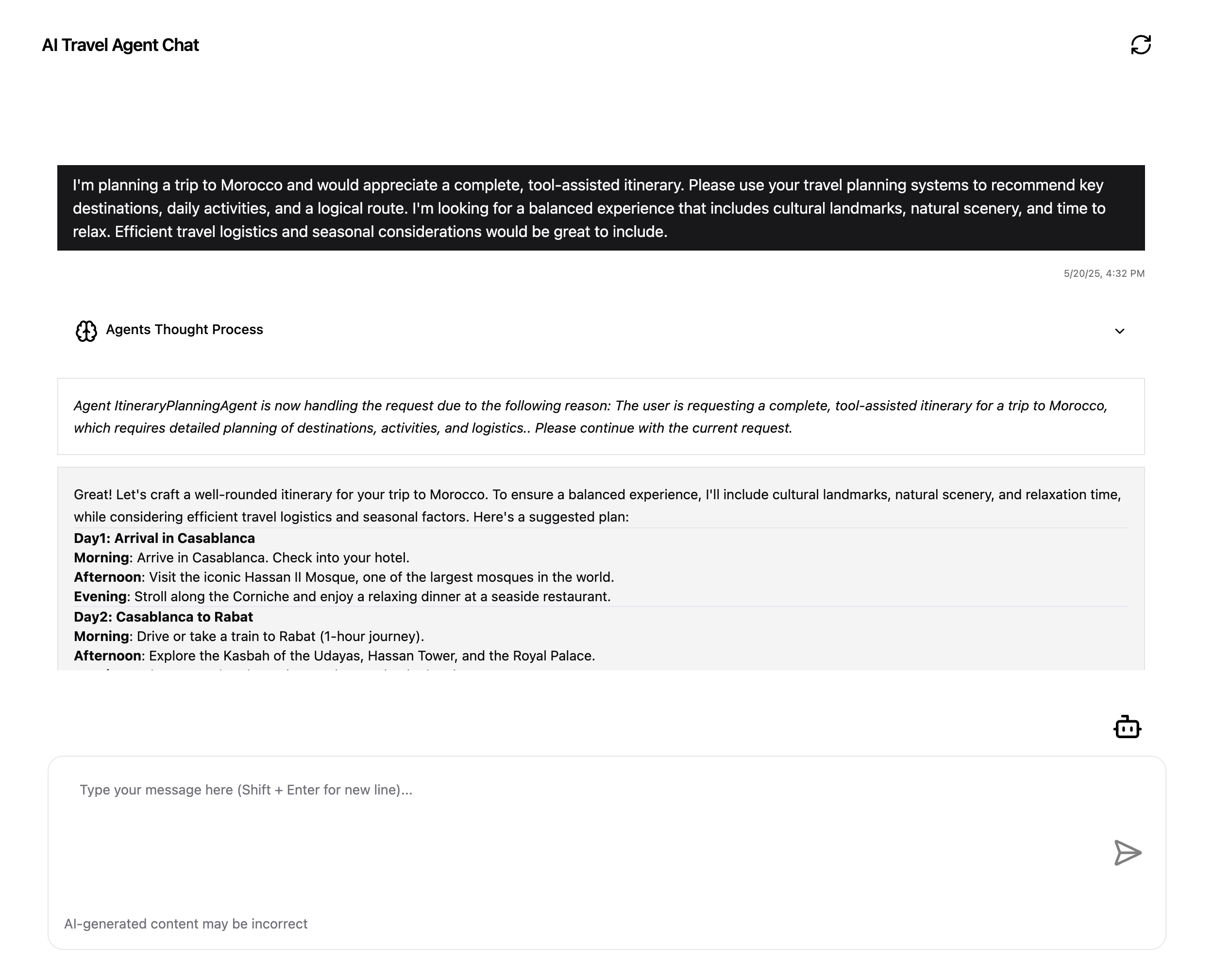

The AI Travel Agents is a robust enterprise application (hosted on Azure Container Apps) that leverages MCP and multiple LlamaIndex AI agents to enhance travel agency operations.

Azure AI Travel Agents with LlamaIndex.TS and MCP

:star: To stay updated and get notified about changes, star this repo on GitHub!

Overview • Architecture • Features • Preview the application locally • Cost estimation • Join the Community

Overview

The AI Travel Agents is a robust enterprise application that leverages multiple AI agents to enhance travel agency operations. The application demonstrates how LlamaIndex.TS orchestrates multiple AI agents to assist employees in handling customer queries, providing destination recommendations, and planning itineraries. Multiple MCP (Model Context Protocol) servers, built with Python, Node.js, Java and .NET, are used to provide various tools and services to the agents, enabling them to work together seamlessly.

| Agent Name | Purpose |
| ---------------------------- | ------------------------------------------------------------------------------------------------------------- |
| Customer Query Understanding | Extracts key preferences from customer inquiries. |
| Destination Recommendation | Suggests destinations based on customer preferences. |
| Itinerary Planning | Creates a detailed itinerary and travel plan. |
| Code Evaluation | Executes custom logic and scripts when needed. |
| Model Inference | Runs a custom LLM using ONNX and vLLM on Azure Container Apps' serverless GPU for high-performance inference. |
| Web Search | Uses Grounding with Bing Search to fetch live travel data. |
| Echo Ping | Echoes back any received input (used as an MCP server example). |
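To make the idea of specialized agents concrete, here is a toy TypeScript sketch that pairs a subset of the agents in the table with their purposes and dispatches a query by naive keyword matching. It is a deliberately simplified stand-in: in this sample, LlamaIndex.TS orchestrates the agents rather than hand-written rules, so the agent identifiers, the keywords, and `routeQuery` are all hypothetical.

```typescript
// Toy illustration only: NOT the LlamaIndex.TS API and NOT how the sample routes requests.
type AgentId =
  | "customer-query-understanding"
  | "destination-recommendation"
  | "itinerary-planning"
  | "web-search"
  | "echo-ping";

interface AgentSpec {
  id: AgentId;
  purpose: string;    // copied from the table above
  keywords: string[]; // HYPOTHETICAL trigger words used only by this toy router
}

const AGENTS: AgentSpec[] = [
  { id: "destination-recommendation", purpose: "Suggests destinations based on customer preferences.", keywords: ["where", "destination", "recommend"] },
  { id: "itinerary-planning", purpose: "Creates a detailed itinerary and travel plan.", keywords: ["itinerary", "plan", "schedule"] },
  { id: "web-search", purpose: "Uses Grounding with Bing Search to fetch live travel data.", keywords: ["price", "flight", "today", "live"] },
  { id: "echo-ping", purpose: "Echoes back any received input.", keywords: ["echo", "ping"] },
];

// When nothing matches, start by extracting the customer's preferences.
const FALLBACK: AgentId = "customer-query-understanding";

// Pick the first agent (in list order) whose keywords appear in the query.
function routeQuery(query: string): AgentId {
  const text = query.toLowerCase();
  const match = AGENTS.find((agent) => agent.keywords.some((k) => text.includes(k)));
  return match ? match.id : FALLBACK;
}
```

For example, `routeQuery("Can you plan a 5-day itinerary in Lisbon?")` returns `"itinerary-planning"`, while `"I like beaches and hiking"` matches no keyword and falls back to `"customer-query-understanding"`. Plain substring matching is brittle (`"ping"` also matches `"shopping"`), which is one reason to leave routing to an LLM-driven orchestrator.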

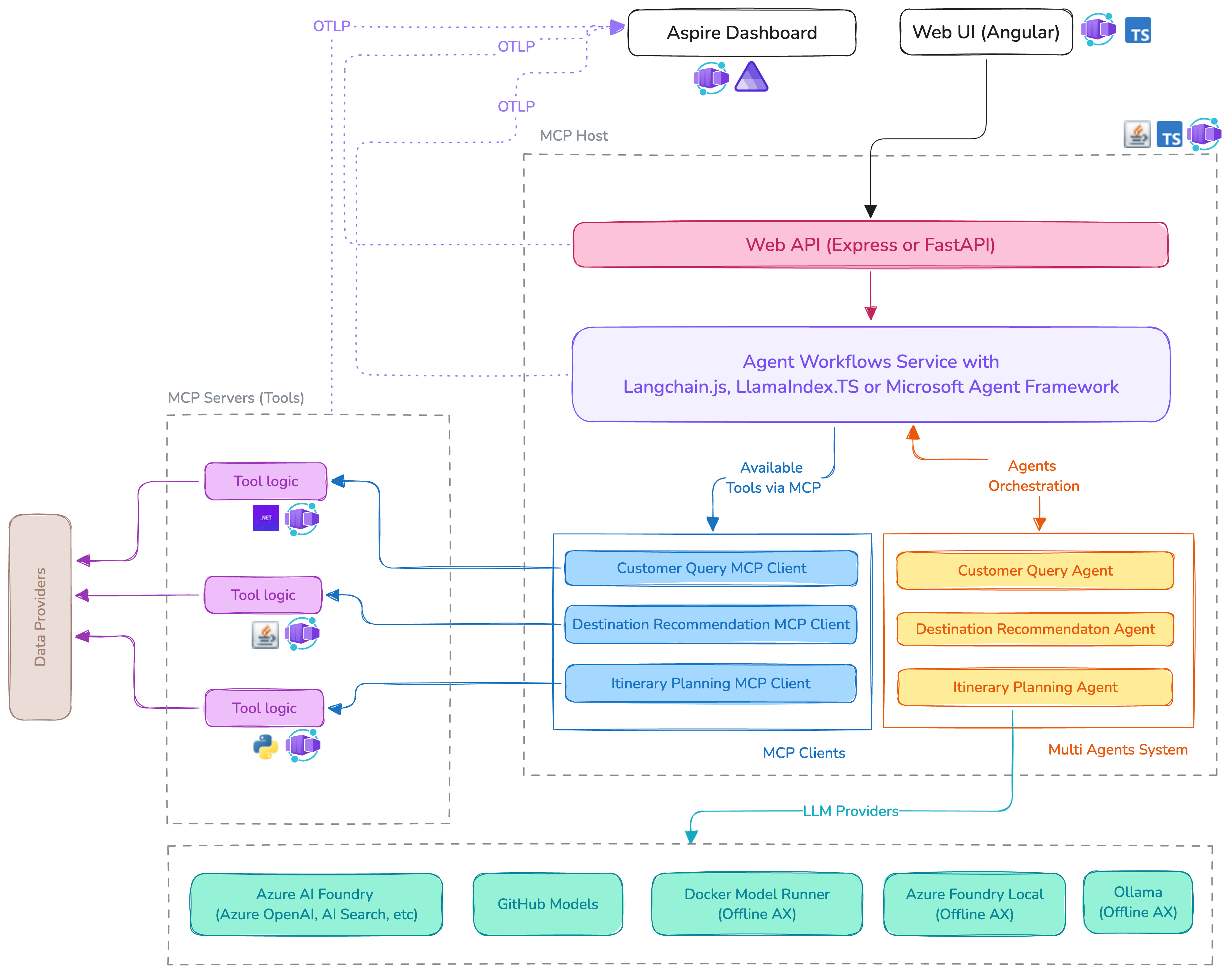

High-Level Architecture

The architecture of the AI Travel Agents application is designed to be modular and scalable:

- All components are containerized using Docker so that they can be easily deployed and managed by Azure Container Apps.

- All agent tools are exposed as MCP (Model Context Protocol) servers and are called by MCP clients.

- MCP servers are implemented independently using different technologies, such as Python, Node.js, Java, and .NET.

- The Agent Workflow Service orchestrates the interaction between the agents and MCP clients, allowing them to work together seamlessly.

- The Aspire Dashboard is used to monitor the application, providing insights into the performance and behavior of the agents (through the OpenTelemetry integration).

[!NOTE] New to the Model Context Protocol (MCP)? Check out our free MCP for Beginners guide.
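In this architecture, agents reach every tool through an MCP client talking to an MCP server. MCP messages are JSON-RPC 2.0: a tool invocation is a `tools/call` request whose params carry the tool name and its arguments, and a successful result carries a `content` array. The sketch below builds such a request and answers it with an in-process stand-in for the Echo Ping server. The tool name `echo`, the `text` argument, and both function names are hypothetical; the real echo-ping server runs in its own container and is reached over an MCP transport, not a direct function call.

```typescript
// Sketch of the JSON-RPC 2.0 shapes behind an MCP tool call (simplified).
interface ToolCallParams {
  name: string;
  arguments?: Record<string, unknown>;
}

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: ToolCallParams;
}

interface TextContent {
  type: "text";
  text: string;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: { content: TextContent[]; isError?: boolean };
  error?: { code: number; message: string };
}

// Client side: wrap a tool name and its arguments in a tools/call request.
function buildToolCall(id: number, name: string, args: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Server side: a HYPOTHETICAL echo tool. This toy server only understands tools/call.
function handleEchoServer(req: JsonRpcRequest): JsonRpcResponse {
  if (req.method !== "tools/call") {
    return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
  }
  const params = req.params;
  if (!params || params.name !== "echo") {
    return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: "Unknown tool" } };
  }
  // Echo the "text" argument back unchanged as a single text content item.
  const text = String(params.arguments?.["text"] ?? "");
  return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text }] } };
}
```

`handleEchoServer(buildToolCall(1, "echo", { text: "hello" }))` yields a result whose first content item is `{ type: "text", text: "hello" }`. Unknown methods and unknown tools get the standard JSON-RPC error codes -32601 and -32602.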

Features

- Multiple AI agents (each with its own specialty)

- Orchestrated by LlamaIndex.TS

- Supercharged by MCP

- Deployed serverlessly via Azure Container Apps

- Includes an llms.txt file to provide information to help LLMs use this project at inference time
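The llms.txt proposal describes a Markdown file with an H1 project name, an optional blockquote summary, and H2 sections that list links in the form `- [name](url): notes`. As an illustration of that structure (not of this project's actual llms.txt contents), here is a small TypeScript parser sketch. It handles only the elements just listed, and `parseLlmsTxt` is a hypothetical name.

```typescript
interface LlmsTxtLink {
  name: string;
  url: string;
  notes?: string;
}

interface LlmsTxt {
  title: string;
  summary?: string;
  sections: Record<string, LlmsTxtLink[]>;
}

// Matches "- [name](url)" with an optional ": notes" suffix.
const LINK_LINE = /^- \[([^\]]+)\]\(([^)]+)\)(?::\s*(.*))?$/;

function parseLlmsTxt(source: string): LlmsTxt {
  const doc: LlmsTxt = { title: "", sections: {} };
  let section: string | undefined;
  for (const raw of source.split(/\r?\n/)) {
    const line = raw.trim();
    if (!doc.title && line.startsWith("# ")) {
      // First H1 is the project name.
      doc.title = line.slice(2).trim();
    } else if (doc.summary === undefined && section === undefined && line.startsWith("> ")) {
      // First blockquote before any section is the summary.
      doc.summary = line.slice(2).trim();
    } else if (line.startsWith("## ")) {
      // Each H2 opens a new list of links.
      section = line.slice(3).trim();
      doc.sections[section] = [];
    } else if (section !== undefined) {
      const match = LINK_LINE.exec(line);
      if (match) {
        doc.sections[section].push({ name: match[1], url: match[2], notes: match[3] || undefined });
      }
    }
  }
  return doc;
}
```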

Prerequisites

Ensure you have the following installed before running the application:

- Azure Developer CLI

- Docker (v4.41.2 or later)

- Git

- Node.js (for the UI and API services)

- PowerShell 7+ (pwsh), for Windows users only.

  - Important: Ensure you can run pwsh.exe from a PowerShell terminal. If this fails, you likely need to upgrade PowerShell.
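The Docker requirement above is a minimum version. If you script your environment checks, compare versions numerically per component: a plain string comparison would rank "4.9.0" above "4.41.2". A minimal TypeScript sketch, assuming you already have the installed version as a string (how you read it from Docker Desktop is outside this sketch); `isAtLeast`, `parseVersion`, and `DOCKER_MINIMUM` are hypothetical names, not part of the sample.

```typescript
// Minimum Docker version from the prerequisites list.
const DOCKER_MINIMUM = "4.41.2";

// Split "v4.41.2" into [4, 41, 2]; non-numeric parts count as 0.
// Pre-release suffixes such as "-beta" are dropped by parseInt, not compared.
function parseVersion(version: string): number[] {
  return version
    .trim()
    .replace(/^v/i, "")
    .split(".")
    .map((part) => parseInt(part, 10) || 0);
}

// True when `actual` equals or is newer than `minimum`; missing parts count as 0.
function isAtLeast(actual: string, minimum: string): boolean {
  const a = parseVersion(actual);
  const b = parseVersion(minimum);
  for (let i = 0; i < Math.max(a.length, b.length); i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    if (x !== y) return x > y;
  }
  return true;
}
```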

Preview the application locally

To run and preview the application locally, follow these steps:

- Clone the repository:

Using HTTPS

git clone https://github.com/Azure-Samples/azure-ai-travel-agents.git

Using SSH

git clone git@github.com:Azure-Samples/azure-ai-travel-agents.git

Using GitHub CLI

gh repo clone Azure-Samples/azure-ai-travel-agents

- Navigate to the cloned repository:

cd azure-ai-travel-agents

- Log in to your Azure account:

azd auth login

For GitHub Codespaces users

If the previous command fails, try:

azd auth login --use-device-code

- Provision the Azure resources:

azd provision

When asked, enter a name that will be used for the resource group. Depending on the region you choose and the available resources and quotas, you may encounter provisioning errors. If this happens, please read our troubleshooting guide in the Advanced Setup documentation.

- Open a new terminal and run the following command to start the API:

npm start --prefix=src/api

- Open a new terminal and run the following command to start the UI:

npm start --prefix=src/ui

- Once all services are up and running, you can:

  - Access the UI at http://localhost:4200.

  - View the traces via the Aspire Dashboard at http://localhost:18888.

    - On the Structured tab, you'll see the logging messages from the tool-echo-ping and api services. The Traces tab shows the traces across the services, such as the call from api to echo-agent.
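Before opening the UI or the dashboard, a quick reachability check can confirm that both local services are listening. A minimal TypeScript sketch, assuming the default ports listed above; the probe function is injected so the logic stays testable, and `findUnreachable` and `LOCAL_ENDPOINTS` are hypothetical names, not part of the sample.

```typescript
// Default local endpoints from the steps above.
const LOCAL_ENDPOINTS: Record<string, string> = {
  ui: "http://localhost:4200",
  aspireDashboard: "http://localhost:18888",
};

// A probe resolves to true when the URL answers; it may also reject.
type Probe = (url: string) => Promise<boolean>;

// Probe every endpoint once, in parallel, and return the names that did not respond.
async function findUnreachable(endpoints: Record<string, string>, probe: Probe): Promise<string[]> {
  const checks = Object.entries(endpoints).map(async ([name, url]) => {
    try {
      return { name, up: await probe(url) };
    } catch {
      // A rejected probe (for example, connection refused) counts as unreachable.
      return { name, up: false };
    }
  });
  const results = await Promise.all(checks);
  return results.filter((r) => !r.up).map((r) => r.name);
}
```

On Node.js 18 or later, which provides a global `fetch`, a real probe could be `async (url) => (await fetch(url)).ok`. A refused connection makes `fetch` reject, which the sketch reports as unreachable.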

[!IMPORTANT] If you encounter issues when starting either the API or the UI, try running azd hooks run postprovision to force the post-provisioning hooks to run. In some cases, the azd provision command does not execute the post-provisioning hooks automatically the first time you run it.

Use GitHub Codespaces

You can run this project directly in your browser by using GitHub Codespaces, which opens a web-based VS Code instance.

Use a VS Code dev container

A similar option to Codespaces is VS Code Dev Containers, which opens the project in your local VS Code instance using the Dev Containers extension.

You will also need to have Docker installed on your machine to run the container.

Cost estimation

Pricing varies per region and usage, so it isn't possible to predict exact costs for your usage. However, you can use the Azure pricing calculator for the resources below to get an estimate.

- Azure Container Apps: Consumption plan, free for the first 2M requests per month. Pricing per request and per vCPU and memory used.

- Azure Container Registry: Free for the first 2GB of storage. Pricing per GB stored and per GB data transferred.

- Azure OpenAI: Standard tier, GPT model. Pricing per 1K tokens used, and at least 1K tokens are used per query.

- Azure Monitor: Free for the first 5GB of data ingested. Pricing per GB ingested after that.
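Each item in the list above follows the same pattern: usage up to a free allowance costs nothing, and only the excess is billed at a per-unit rate. The TypeScript sketch below encodes that arithmetic. The free allowances come from the list; the unit prices are hypothetical placeholders that you must replace with current figures for your region from the Azure pricing calculator, and the function names are illustrative only.

```typescript
// Unit prices passed in here are HYPOTHETICAL placeholders, not real Azure prices.
interface MeteredItem {
  label: string;
  usage: number;         // units consumed in the billing period
  freeAllowance: number; // free units, e.g. 5 (GB) for Azure Monitor per the list above
  unitPrice: number;     // hypothetical price per unit beyond the allowance
}

// Only the usage above the free allowance is billed.
function billableCost(item: MeteredItem): number {
  return Math.max(0, item.usage - item.freeAllowance) * item.unitPrice;
}

// Sum the billable cost of every metered item.
function estimateTotal(items: MeteredItem[]): number {
  return items.reduce((sum, item) => sum + billableCost(item), 0);
}

// Azure OpenAI is priced per 1K tokens, so express usage in thousands of tokens.
// With at least 1K tokens per query, tokensPerQuery should be >= 1000.
function openAIUsageInThousands(queries: number, tokensPerQuery: number): number {
  return (queries * tokensPerQuery) / 1000;
}
```

For example, with at least 1K tokens per query, 500 queries consume at least `openAIUsageInThousands(500, 1000)` = 500 units of 1K tokens, which you then multiply by your model's per-1K price. Likewise, 8GB ingested into Azure Monitor leaves 3GB billable after the 5GB free allowance.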

⚠️ To avoid unnecessary costs, remember to take down your app if it's no longer in use, either by deleting the resource group in the Portal or running azd down --purge (see Clean up).

Deploy the sample

- Open a terminal and navigate to the root of the project.

- Authenticate with Azure by running azd auth login.

- Run azd up to deploy the application to Azure. This provisions the Azure resources, deploys this sample with all its containers, and sets up the necessary configuration.

  - You will be prompted to select a base location for the resources. If you're unsure which location to choose, select swedencentral.

  - By default, the OpenAI resource will be deployed to swedencentral. You can set a different location with azd env set AZURE_LOCATION <location>. Currently, only a short list of locations is accepted. That list is based on the OpenAI model availability table and may become outdated as availability changes.

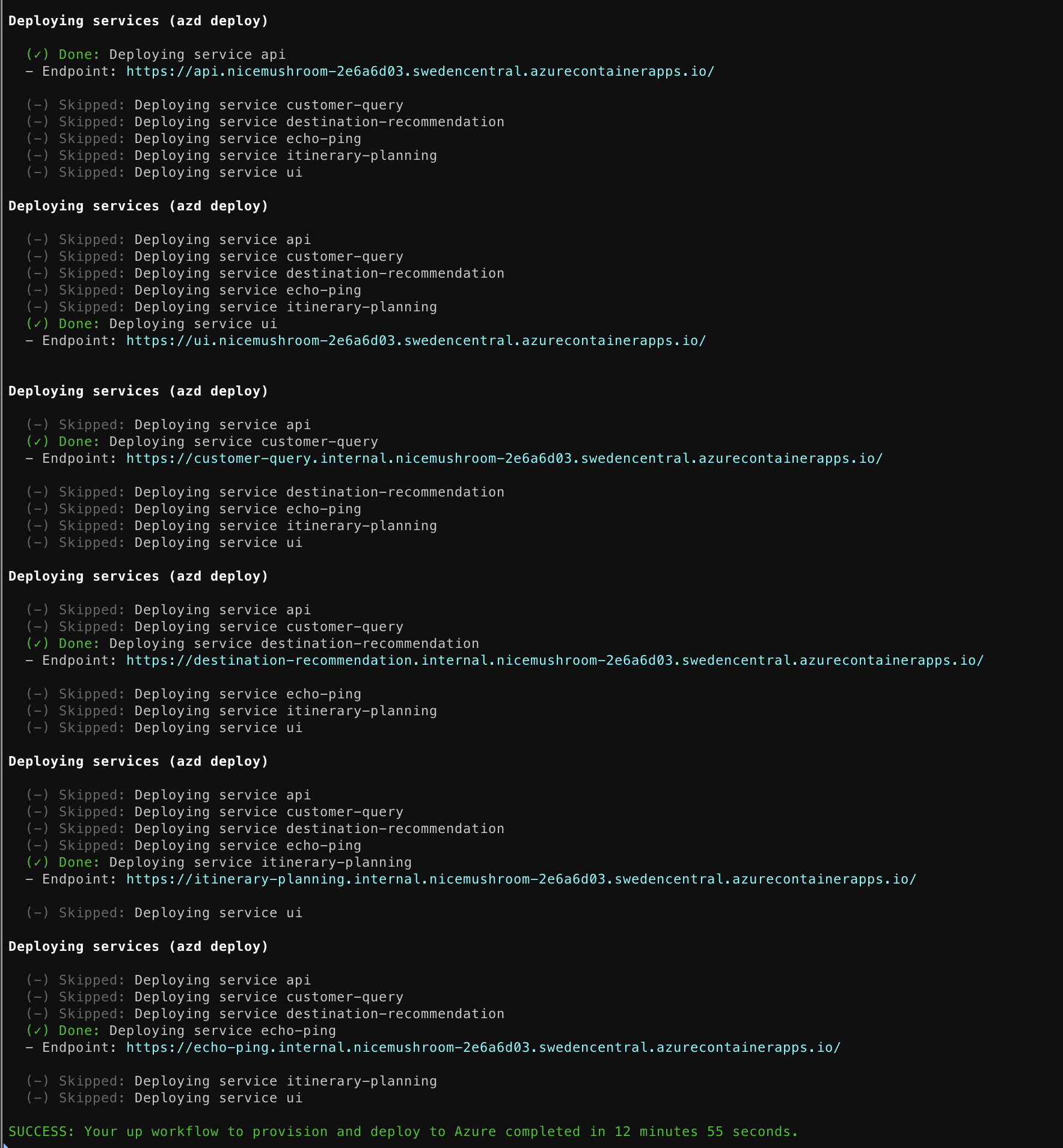

The deployment process will take a few minutes. Once it's done, you'll see the URL of the web app in the terminal.

You can now open the web app in your browser and start chatting with the bot.

Clean up

To clean up all the Azure resources created by this sample:

- Run azd down --purge

- When asked if you are sure you want to continue, enter y.

The resource group and all the resources will be deleted.

Advanced Setup

To run the application in a more advanced local setup or deploy it to Azure, please refer to the Advanced Setup documentation. It covers setting up the Azure Container Apps environment, using local LLM providers, configuring the services, and deploying the application to Azure.

Contributing

We welcome contributions to the AI Travel Agents project! If you have suggestions, bug fixes, or new features, please feel free to submit a pull request. For more information on contributing, please refer to the CONTRIBUTING.md file.

Join the Community

We encourage you to join our Azure AI Foundry Developer Community to share your experiences, ask questions, and get support:

- aka.ms/foundry/discord - Join our Discord community for real-time discussions and support.

- aka.ms/foundry/forum - Visit our Azure AI Foundry Developer Forum to ask questions and share your knowledge.